Business and Data Analytics

What is Data Visualization?

Data visualization translates complex data sets into visual formats that are easier for the human brain to comprehend. This can include a variety of visual tools such as:

- Charts: Bar charts, line charts, pie charts, etc.

- Graphs: Scatter plots, histograms, etc.

- Maps: Geographic maps, heat maps, etc.

- Dashboards: Interactive platforms that combine multiple visualizations.

The primary goal of data visualization is to make data more accessible and easier to interpret, allowing users to identify patterns, trends, and outliers quickly.

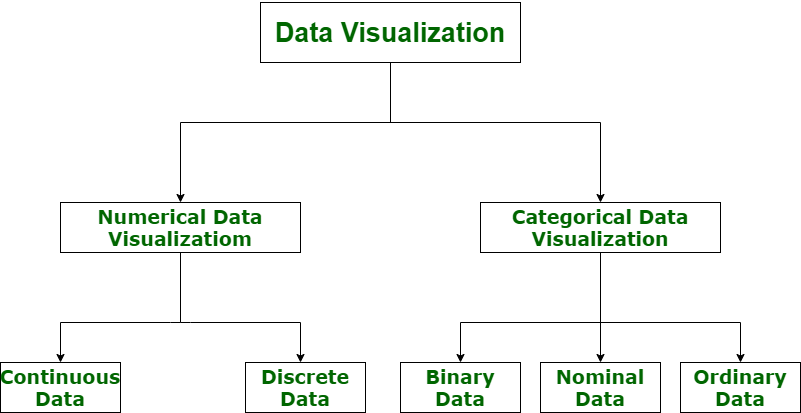

Types of Data for Visualization

Data visualization is categorized into the following categories:

- Numerical Data

- Categorical Data

Let’s understand the visualization of data via a diagram with its all categories.

Why is Data Visualization Important?

Let’s take an example. Suppose you compile visualization data of the company’s profits from 2013 to 2023 and create a line chart. It would be very easy to see the line going constantly up with a drop in just 2018. So you can observe in a second that the company has had continuous profits in all the years except a loss in 2018.

It would not be that easy to get this information so fast from a data table. This is just one demonstration of the usefulness of data visualization. Let’s see some more reasons why visualization of data is so important.

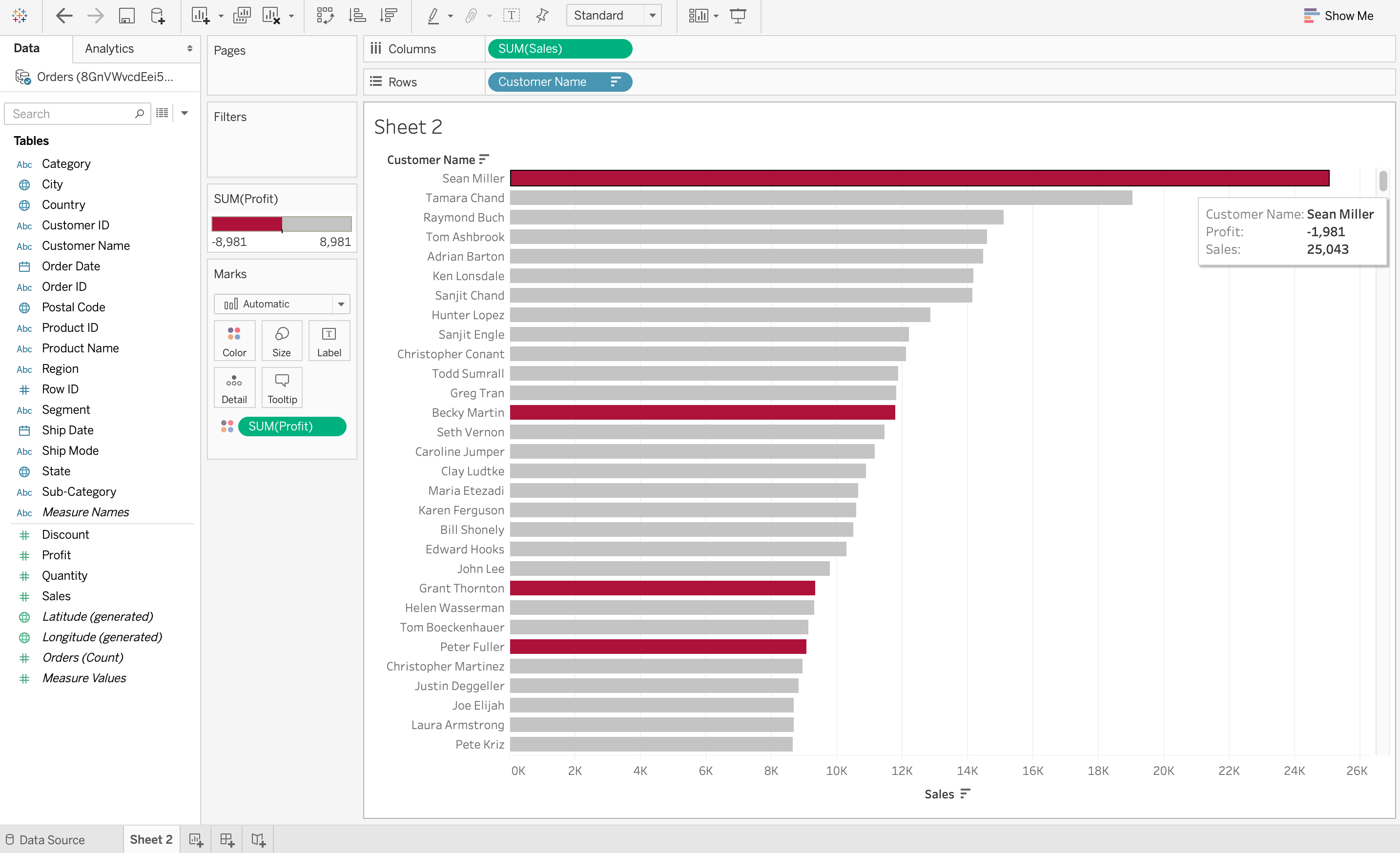

1. Data Visualization Discovers the Trends in Data

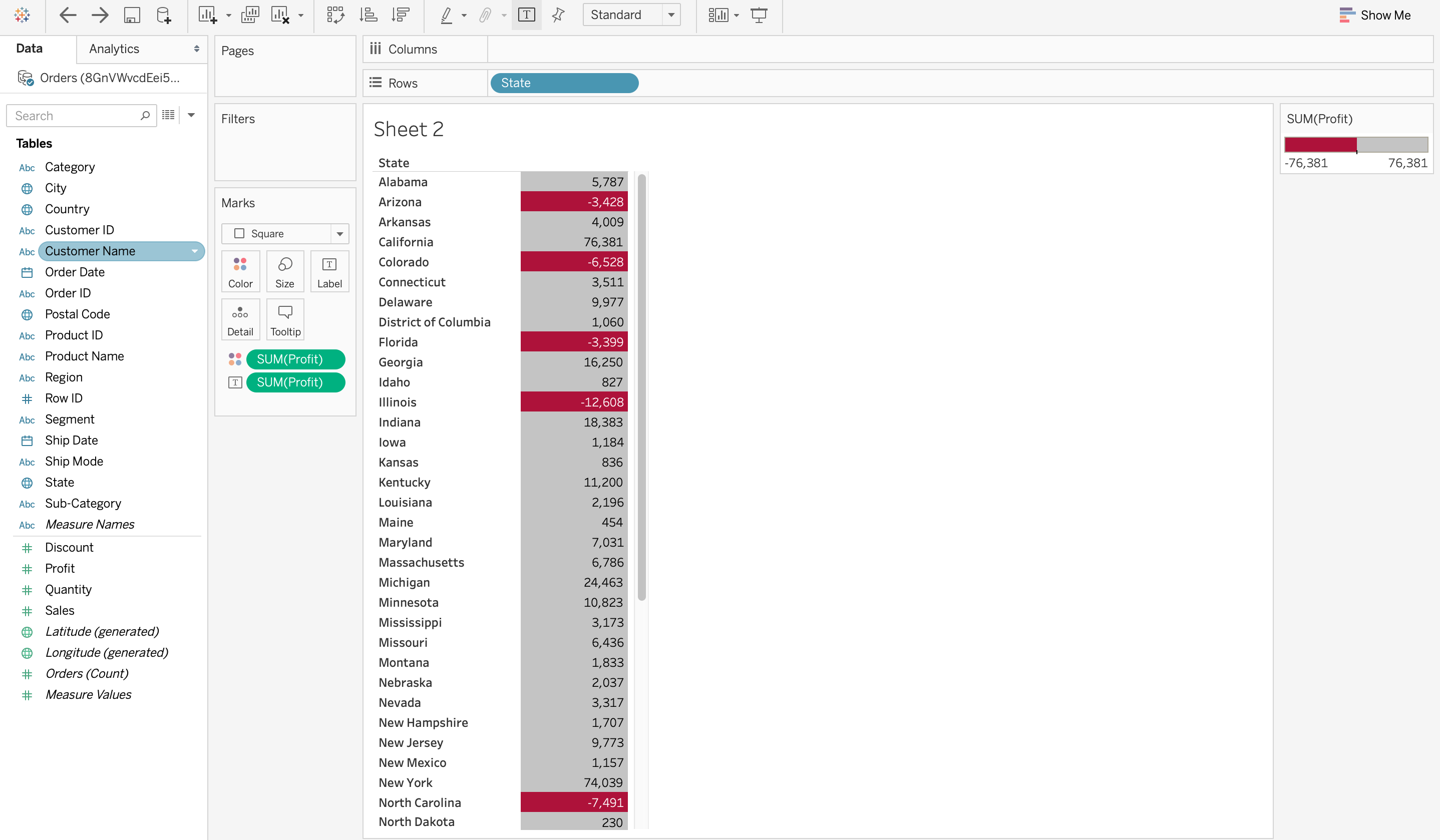

The most important thing that data visualization does is discover the trends in data. After all, it is much easier to observe data trends when all the data is laid out in front of you in a visual form as compared to data in a table. For example, the screenshot below on visualization on Tableau demonstrates the sum of sales made by each customer in descending order. However, the color red denotes loss while grey denotes profits. So it is very easy to observe from this visualization that even though some customers may have huge sales, they are still at a loss. This would be very difficult to observe from a table.

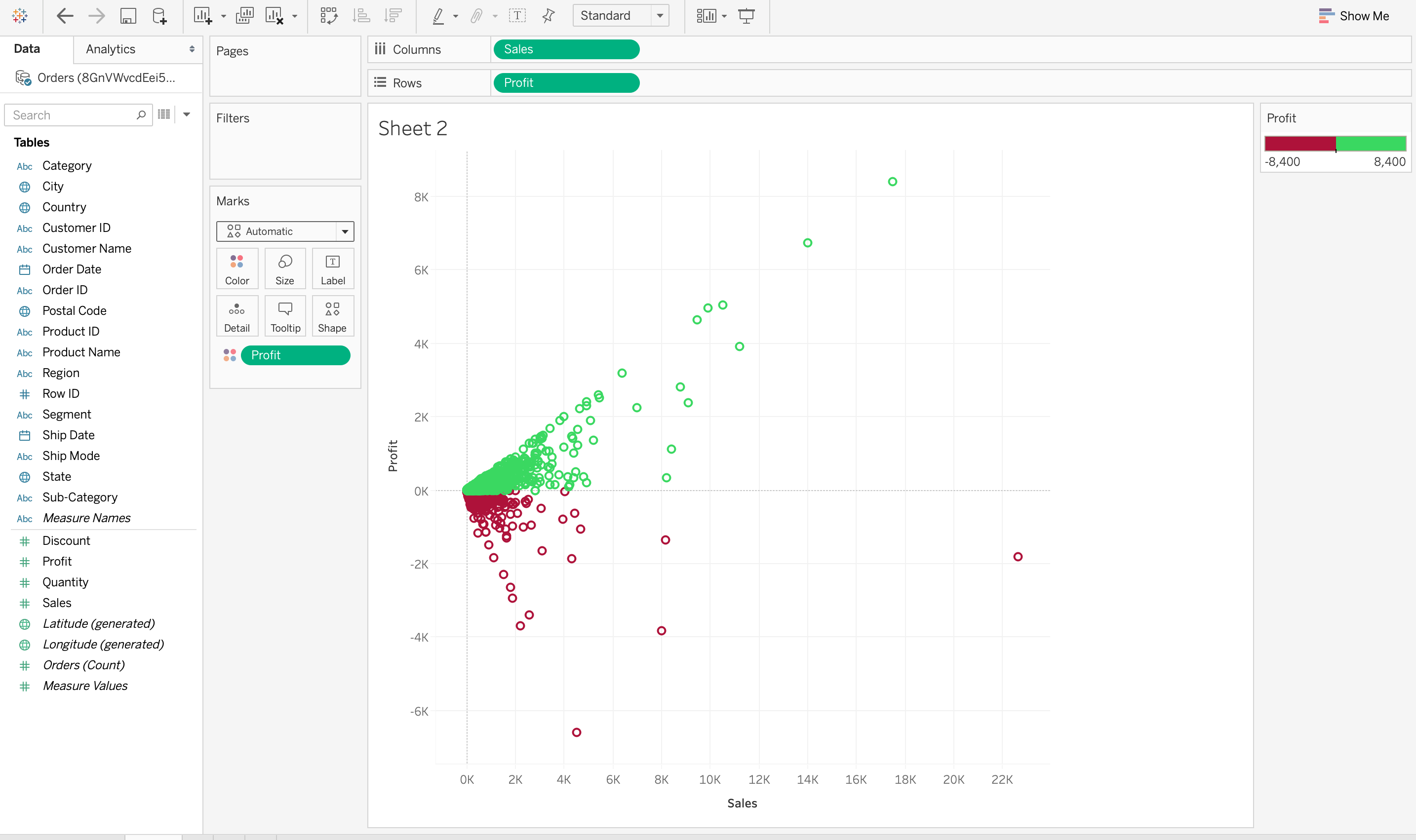

2. Data Visualization Provides a Perspective on the Data

Visualizing Data provides a perspective on data by showing its meaning in the larger scheme of things. It demonstrates how particular data references stand concerning the overall data picture. In the data visualization below, the data between sales and profit provides a data perspective concerning these two measures. It also demonstrates that there are very few sales above 12K and higher sales do not necessarily mean a higher profit.

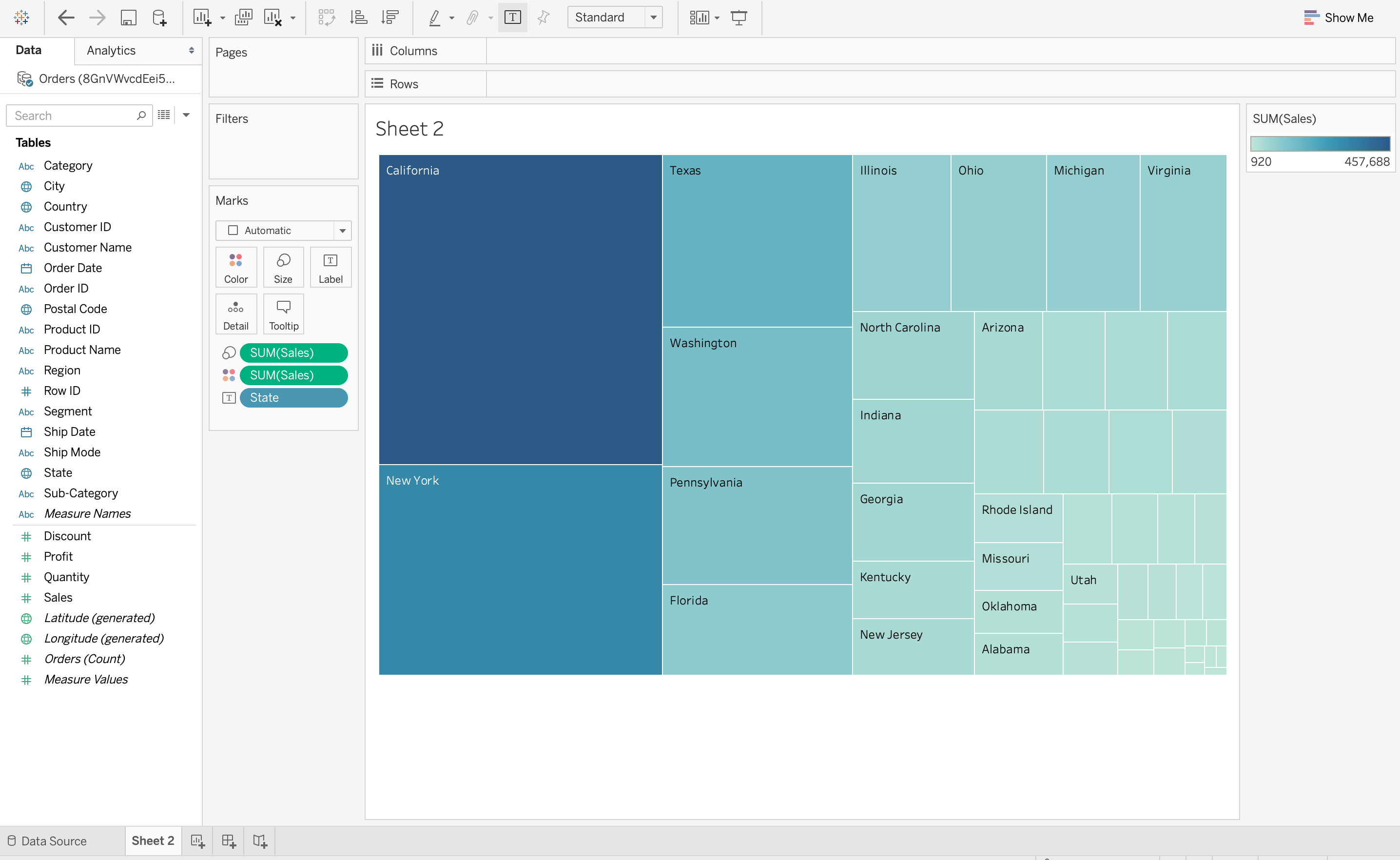

3. Data Visualization Puts the Data into the Correct Context

It isn’t easy to understand the context of the data with data visualization. Since context provides the whole circumstances of the data, it is very difficult to grasp by just reading numbers in a table. In the below data visualization on Tableau, a TreeMap is used to demonstrate the number of sales in each region of the United States. It is very easy to understand from this data visualization that California has the largest number of sales out of the total number since the rectangle for California is the largest. But this information is not easy to understand outside of context without visualizing data.

4. Data Visualization Saves Time

It is definitely faster to gather some insights from the data using data visualization rather than just studying a chart. In the screenshot below on Tableau, it is very easy to identify the states that have suffered a net loss rather than a profit. This is because all the cells with a loss are coloured red using a heat map, so it is obvious states have suffered a loss. Compare this to a normal table where you would need to check each cell to see if it has a negative value to determine a loss. Visualizing Data can save a lot of time in this situation!

5. Data Visualization Tells a Data Story

Data visualization is also a medium to tell a data story to the viewers. The visualization can be used to present the data facts in an easy-to-understand form while telling a story and leading the viewers to an inevitable conclusion. This data story, like any other type of story, should have a good beginning, a basic plot, and an ending that it is leading towards. For example, if a data analyst has to craft a data visualization for company executives detailing the profits of various products, then the data story can start with the profits and losses of multiple products and move on to recommendations on how to tackle the losses.

Now, that we have understood the basics of Data Visualization, along with its importance, now will be discussing the Advantages, Disadvantages and Data Science Pipeline (along with the diagram) which will help you to understand how data is compiled through various checkpoints.

Types of Data Visualization Techniques

Various types of visualizations cater to diverse data sets and analytical goals.

- Bar Charts: Ideal for comparing categorical data or displaying frequencies, bar charts offer a clear visual representation of values.

- Line Charts: Perfect for illustrating trends over time, line charts connect data points to reveal patterns and fluctuations.

- Pie Charts: Efficient for displaying parts of a whole, pie charts offer a simple way to understand proportions and percentages.

- Scatter Plots: Showcase relationships between two variables, identifying patterns and outliers through scattered data points.

- Histograms: Depict the distribution of a continuous variable, providing insights into the underlying data patterns.

- Heatmaps: Visualize complex data sets through color-coding, emphasizing variations and correlations in a matrix.

- Box Plots: Unveil statistical summaries such as median, quartiles, and outliers, aiding in data distribution analysis.

- Area Charts: Similar to line charts but with the area under the line filled, these charts accentuate cumulative data patterns.

- Bubble Charts: Enhance scatter plots by introducing a third dimension through varying bubble sizes, revealing additional insights.

- Treemaps: Efficiently represent hierarchical data structures, breaking down categories into nested rectangles.

- Violin Plots: Violin plots combine aspects of box plots and kernel density plots, providing a detailed representation of the distribution of data.

- Word Clouds: Word clouds are visual representations of text data where words are sized based on their frequency.

- 3D Surface Plots: 3D surface plots visualize three-dimensional data, illustrating how a response variable changes in relation to two predictor variables.

- Network Graphs: Network graphs represent relationships between entities using nodes and edges. They are useful for visualizing connections in complex systems, such as social networks, transportation networks, or organizational structures.

- Sankey Diagrams: Sankey diagrams visualize flow and quantity relationships between multiple entities. Often used in process engineering or energy flow analysis.

Visualization of data not only simplifies complex information but also enhances decision-making processes. Choosing the right type of visualization helps to unveil hidden patterns and trends within the data, making informed and impactful conclusions.

Tools for Visualization of Data

The following are the 10 best Data Visualization Tools

- Tableau

- Looker

- Zoho Analytics

- Sisense

- IBM Cognos Analytics

- Qlik Sense

- Domo

- Microsoft Power BI

- Klipfolio

- SAP Analytics Cloud

Advantages and Disadvantages of Data Visualization

Advantages of Data Visualization:

- Enhanced Comparison: Visualizing performances of two elements or scenarios streamlines analysis, saving time compared to traditional data examination.

- Improved Methodology: Representing data graphically offers a superior understanding of situations, exemplified by tools like Google Trends illustrating industry trends in graphical forms.

- Efficient Data Sharing: Visual data presentation facilitates effective communication, making information more digestible and engaging compared to sharing raw data.

- Sales Analysis: Data visualization aids sales professionals in comprehending product sales trends, identifying influencing factors through tools like heat maps, and understanding customer types, geography impacts, and repeat customer behaviors.

- Identifying Event Relations: Discovering correlations between events helps businesses understand external factors affecting their performance, such as online sales surges during festive seasons.

- Exploring Opportunities and Trends: Data visualization empowers business leaders to uncover patterns and opportunities within vast datasets, enabling a deeper understanding of customer behaviors and insights into emerging business trends.

Disadvantages of Data Visualization:

- Can be time-consuming: Creating visualizations can be a time-consuming process, especially when dealing with large and complex datasets.

- Can be misleading: While data visualization can help identify patterns and relationships in data, it can also be misleading if not done correctly. Visualizations can create the impression of patterns or trends that may not exist, leading to incorrect conclusions and poor decision-making.

- Can be difficult to interpret: Some types of visualizations, such as those that involve 3D or interactive elements, can be difficult to interpret and understand.

- May not be suitable for all types of data: Certain types of data, such as text or audio data, may not lend themselves well to visualization. In these cases, alternative methods of analysis may be more appropriate.

- May not be accessible to all users: Some users may have visual impairments or other disabilities that make it difficult or impossible for them to interpret visualizations. In these cases, alternative methods of presenting data may be necessary to ensure accessibility.

Best Practices for Visualization Data

Effective data visualization is crucial for conveying insights accurately. Follow these best practices to create compelling and understandable visualizations:

- Audience-Centric Approach: Tailor visualizations to your audience’s knowledge level, ensuring clarity and relevance. Consider their familiarity with data interpretation and adjust the complexity of visual elements accordingly.

- Design Clarity and Consistency: Choose appropriate chart types, simplify visual elements, and maintain a consistent color scheme and legible fonts. This ensures a clear, cohesive, and easily interpretable visualization.

- Contextual Communication: Provide context through clear labels, titles, annotations, and acknowledgments of data sources. This helps viewers understand the significance of the information presented and builds transparency and credibility.

- Engaging and Accessible Design: Design interactive features thoughtfully, ensuring they enhance comprehension. Additionally, prioritize accessibility by testing visualizations for responsiveness and accommodating various audience needs, fostering an inclusive and engaging experience.

Use-Cases and Applications of Data Visualization

1. Business Intelligence and Reporting

In the realm of Business Intelligence and Reporting, organizations leverage sophisticated tools to enhance decision-making processes. This involves the implementation of comprehensive dashboards designed for tracking key performance indicators (KPIs) and essential business metrics. Additionally, businesses engage in thorough trend analysis to discern patterns and anomalies within sales, revenue, and other critical datasets. These visual insights play a pivotal role in facilitating strategic decision-making, empowering stakeholders to respond promptly to market dynamics.

2. Financial Analysis

Financial Analysis in the corporate landscape involves the utilization of visual representations to aid in investment decision-making. Visualizing stock prices and market trends provides valuable insights for investors. Furthermore, organizations conduct comparative analyses of budgeted versus actual expenditures, gaining a comprehensive understanding of financial performance. Visualizations of cash flow and financial statements contribute to a clearer assessment of overall financial health, aiding in the formulation of robust financial strategies.

3. Healthcare

Within the Healthcare sector, the adoption of visualizations is instrumental in conveying complex information. Visual representations are employed to communicate patient outcomes and assess treatment efficacy, fostering a more accessible understanding for healthcare professionals and stakeholders. Moreover, visual depictions of disease spread and epidemiological data are critical in supporting public health efforts. Through visual analytics, healthcare organizations achieve efficient allocation and utilization of resources, ensuring optimal delivery of healthcare services.

4. Marketing and Sales

In the domain of Marketing and Sales, data visualization becomes a powerful tool for understanding customer behavior. Segmentation and behavior analysis are facilitated through visually intuitive charts, providing insights that inform targeted marketing strategies. Conversion funnel visualizations offer a comprehensive view of the customer journey, enabling organizations to optimize their sales processes. Visual analytics of social media engagement and campaign performance further enhance marketing strategies, allowing for more effective and targeted outreach.

5. Human Resources

Human Resources departments leverage data visualization to streamline processes and enhance workforce management. The development of employee performance dashboards facilitates efficient HR operations. Workforce demographics and diversity metrics are visually represented, supporting inclusive practices within organizations. Additionally, analytics for recruitment and retention strategies are enhanced through visual insights, contributing to more effective talent management.

Basic Charts for Data Visualization

Basic charts function foundational tools in information visualization, offering trustworthy insights into datasets. Best data visualization charts are:

- Bar Chart

- Line Chart

- Pie Chart

- Scatter Plot

- Histogram

These basic charts are the basis for deeper data analysis and are vital for conveying statistics correctly.

1. Bar Charts

Bar charts are one of the common visualization tool, used to symbolize and compare express facts by way of showing square bars. A bar chart has X and Y Axis where the X Axis represents one data and the Y axis represents another data. The top of the bar represents the title. Longer bars suggest better values.

There are various types of Bar charts like horizontal bar chart, Stacked bar chart, Grouped bar chart and Diverging bar Chart.

When to Use Bar Chart:

- Comparing Categories: Showcasing contrast among distinct categories to evaluate, summarize or discover relationship in the information.

- Ranking: When we’ve got records with categories that need to be ranked with highest to lowest.

- Relationship between categories: When you have a dataset with multiple specific variables, bar chart can help to display courting between them, to discover patterns and tendencies.

2. Line Charts

Line chart or Line graph is used to symbolize facts through the years series. It presentations records as a series of records points called as markers, connected with the aid of line segments showing the between values over the years. This chart is normally used to evaluate developments, view patterns or examine charge moves.

When to Use Line Chart:

- Line charts can be used to analyze developments over individual values.

- Line charts also are utilized in comparing trends among more than one facts series.

- Line chart is high-quality used for time series information.

3. Pie Charts

A pie chart is a round records visualization tool, this is divided into slices to symbolize numerical percentage or percentages of an entire. Each slice in pie chart corresponds to a category in the dataset and the perspective of the slice is proportional to the share it represents. Pie charts are only valid with small variety of categories. Simple Pie chart and Exploded Pie charts are distinctive varieties of Pie charts.

When to Use Pie Chart:

- Pie charts are used to show specific facts to expose the proportion of elements to the whole. It is used to depict how exclusive classes make up a total pleasant.

- Useful in eventualities where statistics has small range of classes.

- Useful in emphasizing a particular category by way of highlighting a dominant slice.

4. Scatter Chart (Plots)

A scatter chart or scatter plot chart is a effective information visualization device, makes use of dots to symbolize information factors. Scatter chart is used to display and examine variables which enables find courting between the ones variables. Scatter chart uses axes, X and Y. X-Axis represents one numerical variable and Y-axis represents another numerical variable. The variable on X-axis is independent and plotted against the dependent variable in Y-axis. Type of scatter chart consists of simple scatter chart, scatter chart with trendline and scatter chart with coloration coding.

When to Use Scatter Chart:

- Scatter charts are awesome for exploring dating between numerical variables and in identifying traits, outliers and subgroup variations.

- It is used while we’ve got to plot two sets of numerical statistics as one collection of X and Y coordinates.

- Scatter charts are satisfactory used for identifying outliers or unusual remark for your facts.

5. Histogram

A histogram represents the distribution of numerical facts by using dividing it into periods (packing containers) and displaying the frequency of records as bars. It is commonly used to visualize the underlying distribution of a dataset and discover styles inclusive of skewness, valuable tendency, and variability. Histograms are treasured gear for exploring facts distributions, detecting outliers, and assessing records great.

When to Use Histogram:

- Distribution Visualization: Histograms are best for visualizing the distribution of numerical information, allowing customers to recognize the unfold and shape of the records.

- Data Exploration: They facilitate records exploration by using revealing patterns, trends, and outliers inside datasets, aiding in hypothesis generation and information-pushed decision-making.

- Quality Control: Histograms assist assess statistics first-class by way of identifying anomalies, errors, or inconsistencies inside the facts distribution, enabling facts validation and cleaning strategies.

Advanced Charts for Data Visualization

Different types of data visualization charts, offer advanced charts that provide customers, many powerful tools to explore complicated datasets and extract precious insights. These superior charts empowers to analyze, interpret, and understand complex information structures and relationships efficiently.

- Heatmap

- Area Chart

- Box Plot (Box-and-Whisker Plot)

- Bubble Chart

- Tree Map

- Parallel Coordinates

- Choropleth Map

- Sankey Diagram

- Radar Chart (Spider Chart)

- Network Graph

- Donut Chart

- Gauge Chart

- Sunburst Chart

- Hexbin Plot

- Violin Plot

1. Heatmap

A heatmap visualizes statistics in a matrix layout the usage of colors to symbolize the values of person cells. It is good for figuring out patterns, correlations, and variations within big datasets. Heatmaps are usually utilized in fields together with finance for portfolio analysis, in biology for gene expression analysis, and in advertising for customer segmentation.

When to Use heatmap:

- Identify Clusters: Heatmaps help become aware of clusters or groups inside datasets, helping in segmentation and concentrated on techniques.

- Correlation Analysis: They are useful for visualizing correlations between variables, assisting to discover relationships and traits.

- Risk Assessment: Heatmaps are precious for chance assessment, which include figuring out high-hazard regions in monetary portfolios or detecting anomalies in community visitors.

2. Area Chart

An area chart displays data trends over time by filling the area beneath lines. It is effective for illustrating cumulative adjustments and comparing multiple classes simultaneously. Area charts are typically utilized in finance for monitoring stock prices, in weather technological know-how for visualizing temperature developments, and in challenge control for monitoring development through the years.

When to Use Area charts:

- Tracking Trends: Area charts are appropriate for tracking traits and adjustments over time, making them precious for historic records evaluation.

- Comparative Analysis: They permit for clean contrast of multiple classes or variables over the equal time period.

- Highlighting Patterns: Area charts assist spotlight styles, such as seasonality or cyclical tendencies, in time-collection facts.

3. Box Plot (Box-and-Whisker Plot)

A box plot provides a concise precis of the distribution of numerical facts, such as quartiles, outliers, and median values. It is beneficial for identifying variability, skewness, and capacity outliers in datasets. Box plots are typically utilized in statistical analysis, exceptional manipulate, and statistics exploration.

When to Use Box Plots:

- Identify Outliers: Box plots assist discover outliers and extreme values within datasets, helping in information cleansing and anomaly detection.

- Compare Distributions: They permit contrast of distributions between specific groups or categories, facilitating statistical analysis.

- Visualize Spread: Box plots visualize the spread and variability of information, providing insights into the distribution’s form and traits.

4. Bubble Chart

A bubble chart represents records points as bubbles, in which the dimensions and/or colour of every bubble deliver additional facts. It is powerful for visualizing three-dimensional facts and comparing more than one variables simultaneously. Bubble charts are commonly used in finance for portfolio evaluation, in marketing for market segmentation, and in biology for gene expression evaluation.

When to Use bubble chart:

- Multivariate Analysis: Bubble charts permit for multivariate evaluation, permitting the contrast of 3 or greater variables in a unmarried visualization.

- Size and Color Encoding: They leverage size and coloration encoding to deliver extra information, such as fee or class, enhancing records interpretation.

- Relationship Visualization: Bubble charts help visualize relationships between variables, facilitating pattern identification and fashion analysis.

5. Tree Map

A tree map presentations hierarchical facts the usage of nested rectangles, where the size of each rectangle represents a quantitative price. It is effective for visualizing hierarchical systems and comparing proportions in the hierarchy. Tree maps are generally utilized in finance for portfolio evaluation, in facts visualization for displaying report listing systems, and in advertising and marketing for visualizing marketplace share.

When to Use Tree Map:

- Hierarchical Representation: Tree maps excel at representing hierarchical records structures, making them suitable for visualizing organizational hierarchies or nested classes.

- Proportion Comparison: They permit comparison of proportions inside hierarchical systems, aiding in expertise relative sizes and contributions.

- Space Efficiency: Tree maps optimize area utilization by using packing rectangles efficiently, taking into account the visualization of large datasets in a compact layout.

6. Parallel Coordinates

Parallel coordinates visualize multivariate statistics through representing every information point as a line connecting values across multiple variables. They are useful for exploring relationships among variables and figuring out styles or trends. Parallel coordinates are generally used in data evaluation, gadget learning, and sample popularity.

When to Use Parallel Coordinates:

- Multivariate Analysis: Parallel coordinates enable the analysis of multiple variables simultaneously, facilitating sample identification and fashion evaluation.

- Relationship Visualization: They help visualize relationships among variables, such as correlations or clusters, making them precious for exploratory records analysis.

- Outlier Detection: Parallel coordinates resource in outlier detection by identifying facts factors that deviate from the general sample, assisting in anomaly detection and statistics validation.

7. Choropleth Map

A choropleth map uses shade shading or styles to symbolize statistical records aggregated over geographic regions. It is generally used to visualize spatial distributions or variations and identify geographic patterns. Choropleth maps are broadly used in fields which includes demography for populace density mapping, in economics for income distribution visualization, and in epidemiology for disease prevalence mapping.

When to Use Choropleth Map:

- Spatial Analysis: Choropleth maps are best for spatial analysis, permitting the visualization of spatial distributions or variations in records.

- Geographic Patterns: They help become aware of geographic styles, which include clusters or gradients, in datasets, aiding in fashion analysis and decision-making.

- Comparison Across Regions: Choropleth maps allow for clean evaluation of information values throughout one of a kind geographic regions, facilitating local evaluation and coverage planning.

8. Sankey Diagram

A Sankey diagram visualizes the flow of facts or assets among nodes the use of directed flows and varying widths of paths. It is useful for illustrating complex structures or methods and figuring out drift patterns or bottlenecks. Sankey diagrams are typically utilized in power glide evaluation, in deliver chain control for visualizing material flows, and in net analytics for consumer float evaluation.

When to Use Sankey Diagram:

- Flow Visualization: Sankey diagrams excel at visualizing the float of information or resources among nodes, making them valuable for information complex structures or processes.

- Bottleneck Identification: They help perceive bottlenecks or regions of inefficiency within structures by using visualizing flow paths and magnitudes.

- Comparative Analysis: Sankey diagrams permit evaluation of go with the flow patterns between distinct scenarios or time periods, assisting in overall performance evaluation and optimization.

9. Radar Chart (Spider Chart)

A radar chart shows multivariate information on a two-dimensional aircraft with a couple of axes emanating from a primary point. It is beneficial for comparing a couple of variables across distinct categories and identifying strengths and weaknesses. Radar charts are usually utilized in sports for overall performance analysis, in market studies for emblem perception mapping, and in selection-making for multi-criteria decision evaluation.

When to Use Radar Chart:

- Multi-Criteria Comparison: Radar charts permit for the evaluation of more than one criteria or variables across extraordinary classes, facilitating choice-making and prioritization.

- Strengths and Weaknesses Analysis: They assist discover strengths and weaknesses within categories or variables with the aid of visualizing their relative overall performance.

- Pattern Recognition: Radar charts useful resource in pattern recognition via highlighting similarities or variations between classes, assisting in fashion analysis and strategy development.

10. Network Graph

A network graph represents relationships between entities as nodes and edges. It is useful for visualizing complicated networks, consisting of social networks, transportation networks, and organic networks. Network graphs are typically utilized in social network analysis for community detection, in community safety for visualizing community traffic, and in biology for gene interaction analysis.

When to Use Network Graph:

- Relationship Visualization: Network graphs excel at visualizing relationships among entities, which includes connections or interactions, making them valuable for network analysis and exploration.

- Community Detection: They assist discover communities or clusters within networks by using visualizing node connections and densities.

- Path Analysis: Network graphs resource in route analysis by means of visualizing shortest paths or routes among nodes, facilitating course optimization and navigation.

11. Donut or Doughnut chart

A donut chart additionally known as doughnut chart is just like pie chart, but with a blank middle, which offers the arrival of a doughnut. This graphical view offers more aesthetically eye-catching and less cluttered illustration of multiple classes in a dataset.

The ring in the donut chart represents 100% and every class of records is represented with the aid of every slice. The region of every slice indicates how special categories make up a complete amount.

When to Use Donut Chart:

- The donut charts are useful in showing income figures, market proportion or to demonstrate marketing marketing campaign effects, customer segmentation or in similar use instances.

- Used to focus on a single variable and its progress.

- Useful to display components of a whole, showing how person classes make a contribution to an common total.

- Best used for comparing few classes.

12. Gauge Chart

A Gauge chart, one of the visualization tool used to show the progress of a single fee of statistics or key overall performance indicator (KPI) in the direction of a purpose or goal value. The Gauge chart usually displayed like a speedometer which displays facts in a circular arc. There two different kinds of Gauge charts specifically Circular Gauge or Radial Gauge which resembles a speedometer and Linear Gauge.

When to Use Gauge Chart:

Uses of Gauge charts include Goal Achievement, Monitoring Performance, Real-Time Updates and Visualizing Progress.

- Useful in monitoring metrics like income or consumer satisfaction towards benchmark signs set.

- Used in KPI monitoring in tracking development towards a selected aim indicator.

- Can be utilized in project control to music the fame of project progress against assignment timeline.

13. Sunburst Chart

A sunburst chart presents hierarchical records using nested rings, in which each ring represents a degree within the hierarchy. It is beneficial for visualizing hierarchical structures with more than one tiers of aggregation. Sunburst charts permit customers to explore relationships and proportions inside complicated datasets in an interactive and intuitive way.

When to use sunburst charts:

- Visualizing hierarchical data systems, including organizational hierarchies or nested classes.

- Exploring relationships and proportions within multi-level datasets.

- Communicating complex records structures and dependencies in a visually attractive layout.

14. Hexbin Plot

A hexbin plot represents the distribution of dimensional facts by using binning records points into hexagonal cells and coloring each cellular based totally on the range of factors it contains. It is effective for visualizing density in scatter plots with a huge wide variety of information points. Hexbin plots provide insights into spatial patterns and concentrations within datasets.

When to use Hexbin Plot:

- Visualizing the density and distribution of statistics points in two-dimensional area.

- Identifying clusters or concentrations of statistics inside a scatter plot.

- Handling massive datasets with overlapping data factors in a clear and informative way.

15. Violin Plot

A violin plot combines a box plot with a kernel density plot to show the distribution of statistics together with its summary statistics. It is useful for comparing the distribution of more than one organizations or categories. Violin plots provide insights into the shape, unfold, and important tendency of statistics distributions.

When to use Violin Plot:

- Comparing the distribution of continuous variables across distinctive groups or categories.

- Visualizing the shape and spread of information distributions, including skewness and multimodality.

- Presenting precis information and outliers within information distributions in a visually appealing layout.

Visualization Charts for Textual and Symbolic data

Data visualization charts types for textual and symbolic data symbolize facts that is basically composed of words, symbols, or other non-numeric bureaucracy. Some common visualization charts for textual and symbolic facts consist of:

- Word Cloud

- Pictogram Chart

These charts are particularly useful for studying textual facts, identifying key topics or subjects, visualizing keyword frequency, and highlighting enormous phrases or ideas in qualitative analysis or sentiment analysis.

1. Word Cloud

A word cloud is a visual representation of textual content records in which phrases are sized based totally on their frequency or significance inside the textual content. Common words seem larger and greater outstanding, at the same time as less common phrases are smaller. Word clouds provide a short and intuitive manner to identify distinguished phrases or issues within a frame of textual content.

When to use Word Cloud:

- Identifying key themes or subjects within a massive corpus of text.

- Visualizing keyword frequency or distribution in textual facts.

- Highlighting giant terms or principles in qualitative evaluation or sentiment evaluation.

2. Pictogram Chart

A pictogram chart makes use of icons or symbols to represent information values, wherein the size or amount of icons corresponds to the value they represent. It is an powerful way to deliver information in a visually appealing way, mainly when coping with categorical or qualitative records.

When to use pictograph chart:

- Presenting records in a visually enticing format, specially for non-numeric or qualitative records.

- Communicating information to audiences with various tiers of literacy or language talent.

- Emphasizing key statistics points or tendencies the usage of without difficulty recognizable symbols or icons.

Temporal and Trend Charts Data Visualization

Best Data visualization charts for Temporal and trend charts are visualization techniques used to investigate and visualize patterns, traits, and changes over time. These charts are mainly powerful for exploring time-series data, wherein information points are associated with particular timestamps or time periods. Temporal and trend charts provide insights into how statistics evolves over the years and assist perceive recurring styles, anomalies, and tendencies. Some common styles of temporal charts include:

- Line chart

- Streamgraph

- Bullet Graph

- Gantt Chart

- Waterfall Chart

1. Streamgraph

A streamgraph visualizes the trade in the composition of a dataset over time by using stacking regions alongside a baseline. It is useful for displaying trends and styles in temporal data at the same time as preserving continuity throughout time periods. Streamgraphs are especially effective for visualizing sluggish shifts or changes in data distribution over the years.

When to use streamplot:

- Analyzing trends and changes in facts distribution over the years.

- Comparing the relative contributions of different classes or organizations within a dataset.

- Highlighting patterns or fluctuations in facts through the years in a visually attractive manner.

2. Bullet Graph

A bullet graph is a variant of a bar chart designed to expose progress towards a aim or performance towards a target. It includes a single bar supplemented by reference traces and markers to provide context and comparison. Bullet graphs are beneficial for presenting key overall performance signs (KPIs) and monitoring progress toward goals.

When to use Bullet Graph:

- Displaying development toward goals or objectives in a concise and informative manner.

- Comparing real performance in opposition to predefined benchmarks or thresholds.

- Communicating overall performance metrics successfully in dashboards or reports.

3. Gantt Chart

A Gantt chart visualizes challenge schedules or timelines through representing duties or sports as horizontal bars along a time axis. It is beneficial for planning, scheduling, and monitoring progress in venture control. Gantt charts offer a visual evaluation of venture timelines, dependencies, and aid allocation.

When to use Gantt Chart:

- Planning and scheduling complicated tasks with multiple duties and dependencies.

- Tracking progress and managing resources at some stage in the mission lifecycle.

- Communicating undertaking timelines and milestones to stakeholders and team participants.

4. Waterfall Chart

A waterfall chart visualizes the cumulative impact of sequential high-quality and negative values on an preliminary starting point. It is generally utilized in financial analysis to show adjustments in net price over time. Waterfall charts provide a clean visual representation of the way individual factors make contributions to the general alternate in a dataset.

When to use waterfall chart:

- Analyzing and visualizing modifications in economic performance or budget allocations through the years.

- Identifying the sources of gains or losses within a dataset and their cumulative impact.

- Presenting complicated statistics ameliorations or calculations in a clear and concise layout.

Curse of Dimensionality

The Curse of Dimensionality in Machine Learning arises when working with high-dimensional data, leading to increased computational complexity, overfitting, and spurious correlations.

What is the Curse of Dimensionality?

- The Curse of Dimensionality refers to the phenomenon where the efficiency and effectiveness of algorithms deteriorate as the dimensionality of the data increases exponentially.

- In high-dimensional spaces, data points become sparse, making it challenging to discern meaningful patterns or relationships due to the vast amount of data required to adequately sample the space.

- The Curse of Dimensionality significantly impacts machine learning algorithms in various ways. It leads to increased computational complexity, longer training times, and higher resource requirements. Moreover, it escalates the risk of overfitting and spurious correlations, hindering the algorithms’ ability to generalize well to unseen data.

How to Overcome the Curse of Dimensionality?

To overcome the curse of dimensionality, you can consider the following strategies:

Dimensionality Reduction Techniques:

- Feature Selection: Identify and select the most relevant features from the original dataset while discarding irrelevant or redundant ones. This reduces the dimensionality of the data, simplifying the model and improving its efficiency.

- Feature Extraction: Transform the original high-dimensional data into a lower-dimensional space by creating new features that capture the essential information. Techniques such as Principal Component Analysis (PCA) and t-distributed Stochastic Neighbor Embedding (t-SNE) are commonly used for feature extraction.

Data Preprocessing:

- Normalization: Scale the features to a similar range to prevent certain features from dominating others, especially in distance-based algorithms.

- Handling Missing Values: Address missing data appropriately through imputation or deletion to ensure robustness in the model training process.

Python Implementation of Mitigating Curse Of Dimensionality

Here we are using the dataset uci-secom.

Import Necessary Libraries

Import required libraries including scikit-learn modules for dataset loading, model training, data preprocessing, dimensionality reduction, and evaluation.

import numpy as npimport pandas as pdfrom sklearn.feature_selection import SelectKBest, f_classif, VarianceThresholdfrom sklearn.decomposition import PCAfrom sklearn.model_selection import train_test_splitfrom sklearn.preprocessing import StandardScalerfrom sklearn.ensemble import RandomForestClassifierfrom sklearn.metrics import accuracy_scorefrom sklearn.impute import SimpleImputer

Loading the dataset

The Dataset is stored in a CSV file named 'your_dataset.csv', and have a timestamp column named 'Time' and a target variable column named 'Pass/Fail'.

df = pd.read_csv('your_dataset.csv')# Assuming 'X' contains your features and 'y' contains your target variableX = df.drop(columns=['Time', 'Pass/Fail'])y = df['Pass/Fail']

Remove Constant Features

- We are using

VarianceThresholdto remove constant features andSimpleImputerto impute missing values with the mean.

# Remove constant featuresselector = VarianceThreshold()X_selected = selector.fit_transform(X)# Impute missing valuesimputer = SimpleImputer(strategy='mean')X_imputed = imputer.fit_transform(X_selected)

Splitting the data and standardizing

# Split the data into training and test setsX_train, X_test, y_train, y_test = train_test_split(X_imputed, y, test_size=0.2, random_state=42)# Standardize the featuresscaler = StandardScaler()X_train_scaled = scaler.fit_transform(X_train)X_test_scaled = scaler.transform(X_test)

Feature Selection and Dimensionality Reduction

- Feature Selection:

SelectKBestis used to select the top k features based on a specified scoring function (f_classifin this case). It selects the features that are most likely to be related to the target variable. - Dimensionality Reduction:

PCA(Principal Component Analysis) is then used to further reduce the dimensionality of the selected features. It transforms the data into a lower-dimensional space while retaining as much variance as possible.

# Perform feature selectionselector_kbest = SelectKBest(score_func=f_classif, k=20)X_train_selected = selector_kbest.fit_transform(X_train_scaled, y_train)X_test_selected = selector_kbest.transform(X_test_scaled)# Perform dimensionality reductionpca = PCA(n_components=10)X_train_pca = pca.fit_transform(X_train_selected)X_test_pca = pca.transform(X_test_selected)

Training the classifiers

- Training Before Dimensionality Reduction: Train a Random Forest classifier (

clf_before) on the original scaled features (X_train_scaled) without dimensionality reduction. - Evaluation Before Dimensionality Reduction: Make predictions (

y_pred_before) on the test set (X_test_scaled) using the classifier trained before dimensionality reduction, and calculate the accuracy (accuracy_before) of the model. - Training After Dimensionality Reduction: Train a new Random Forest classifier (

clf_after) on the reduced feature set (X_train_pca) after dimensionality reduction. - Evaluation After Dimensionality Reduction: Make predictions (

y_pred_after) on the test set (X_test_pca) using the classifier trained after dimensionality reduction, and calculate the accuracy (accuracy_after) of the model.

# Train a classifier (e.g., Random Forest) without dimensionality reductionclf_before = RandomForestClassifier(n_estimators=100, random_state=42)clf_before.fit(X_train_scaled, y_train)# Make predictions and evaluate the model before dimensionality reductiony_pred_before = clf_before.predict(X_test_scaled)accuracy_before = accuracy_score(y_test, y_pred_before)print(f'Accuracy before dimensionality reduction: {accuracy_before}')# Train a classifier (e.g., Random Forest) on the reduced feature setclf_after = RandomForestClassifier(n_estimators=100, random_state=42)clf_after.fit(X_train_pca, y_train)# Make predictions and evaluate the model after dimensionality reductiony_pred_after = clf_after.predict(X_test_pca)accuracy_after = accuracy_score(y_test, y_pred_after)print(f'Accuracy after dimensionality reduction: {accuracy_after}')

OUTPUT:Accuracy before dimensionality reduction: 0.8745Accuracy after dimensionality reduction: 0.9235668789808917

Correlation Analysis

Correlation analysis is a statistical technique for determining the strength of a link between two variables. It is used to detect patterns and trends in data and to forecast future occurrences.

- Consider a problem with different factors to be considered for making optimal conclusions

- Correlation explains how these variables are dependent on each other.

- Correlation quantifies how strong the relationship between two variables is. A higher value of the correlation coefficient implies a stronger association.

- The sign of the correlation coefficient indicates the direction of the relationship between variables. It can be either positive, negative, or zero.

What is Correlation?

The Pearson correlation coefficient is the most often used metric of correlation. It expresses the linear relationship between two variables in numerical terms. The Pearson correlation coefficient, written as “r,” is as follows:

r=∑(xi−xˉ)2∑(yi−yˉ)2∑(xi−xˉ)(yi−yˉ)

where,

- r: Correlation coefficient

- : ith value first dataset X

- : Mean of first dataset X

- : ith value second dataset Y

- : Mean of second dataset Y

r = -1 indicates a perfect negative correlation.

r = 0 indicates no linear correlation between the variables.

r = 1 indicates a perfect positive correlation.

r = 0 indicates no linear correlation between the variables.

r = 1 indicates a perfect positive correlation.

Types of Correlation

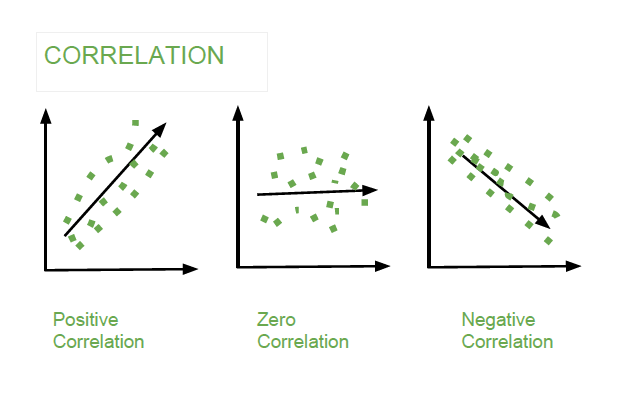

There are three types of correlation:

- Positive Correlation: Positive correlation indicates that two variables have a direct relationship. As one variable increases, the other variable also increases. For example, there is a positive correlation between height and weight. As people get taller, they also tend to weigh more.

- Negative Correlation: Negative correlation indicates that two variables have an inverse relationship. As one variable increases, the other variable decreases. For example, there is a negative correlation between price and demand. As the price of a product increases, the demand for that product decreases.

- Zero Correlation: Zero correlation indicates that there is no relationship between two variables. The changes in one variable do not affect the other variable. For example, there is zero correlation between shoe size and intelligence.

A positive correlation indicates that the two variables move in the same direction, while a negative correlation indicates that the two variables move in opposite directions.

The strength of the correlation is measured by a correlation coefficient, which can range from -1 to 1. A correlation coefficient of 0 indicates no correlation, while a correlation coefficient of 1 or -1 indicates a perfect correlation.

Correlation Coefficients

The different types of correlation coefficients used to measure the relation between two variables are:

Correlation Coefficient | Type of Relation | Levels of Measurement | Data Distribution |

|---|---|---|---|

Pearson Correlation Coefficient | Linear | Interval/Ratio | Normal distribution |

Spearman Rank Correlation Coefficient | Non-Linear | Ordinal | Any distribution |

Kendall Tau Coefficient | Non-Linear | Ordinal | Any distribution |

Phi Coefficient | Non-Linear | Nominal vs. Nominal (nominal with 2 categories (dichotomous)) | Any distribution |

Cramer’s V | Non-Linear | Two nominal variables | Any distribution |

How to Conduct Correlation Analysis

To conduct a correlation analysis, you will need to follow these steps:

- Identify Variable: Identify the two variables that we want to correlate. The variables should be quantitative, meaning that they can be represented by numbers.

- Collect data : Collect data on the two variables. We can collect data from a variety of sources, such as surveys, experiments, or existing records.

- Choose the appropriate correlation coefficient. The Pearson correlation coefficient is the most commonly used correlation coefficient, but there are other correlation coefficients that may be more appropriate for certain types of data.

- Calculate the correlation coefficient. We can use a statistical software package to calculate the correlation coefficient, or you can use a formula.

- Interpret the correlation coefficient. The correlation coefficient can be interpreted as a measure of the strength and direction of the linear relationship between the two variables.

Implementations

Python provides libraries such as “NumPy” and “Pandas” which have various methods to ease various calculations, including correlation analysis.

Using NumPy

import numpy as np

# Create sample datax = np.array([1, 2, 3, 4, 5])y = np.array([5, 7, 3, 9, 1])

# Calculate correlation coefficientcorrelation_coefficient = np.corrcoef(x, y)

print("Correlation Coefficient:", correlation_coefficient)Output:

Correlation Coefficient: [[ 1. -0.3][-0.3 1. ]]

Using pandas

import pandas as pd

# Create a DataFrame with sample datadata = pd.DataFrame({'X': [1, 2, 3, 4, 5], 'Y': [5, 7, 3, 9, 1]})

# Calculate correlation coefficientcorrelation_coefficient = data['X'].corr(data['Y'])

print("Correlation Coefficient:", correlation_coefficient)Output:

Correlation Coefficient: -0.3

Interpretation of Correlation coefficients

- Perfect: 0.80 to 1.00

- Strong: 0.50 to 0.79

- Moderate: 0.30 to 0.49

- Weak: 0.00 to 0.29

Value greater than 0.7 is considered a strong correlation between variables.

Applications of Correlation Analysis

Correlation Analysis is an important tool that helps in better decision-making, enhances predictions and enables better optimization techniques across different fields. Predictions or decision making dwell on the relation between the different variables to produce better results, which can be achieved by correlation analysis.

The various fields in which it can be used are:

- Economics and Finance : Help in analyzing the economic trends by understanding the relations between supply and demand.

- Business Analytics : Helps in making better decisions for the company and provides valuable insights.

- Market Research and Promotions : Helps in creating better marketing strategies by analyzing the relation between recent market trends and customer behavior.

- Medical Research : Correlation can be employed in Healthcare so as to better understand the relation between different symptoms of diseases and understand genetical diseases better.

- Weather Forecasts: Analyzing the correlation between different variables so as to predict weather.

- Better Customer Service : Helps in better understand the customers and significantly increases the quality of customer service.

- Environmental Analysis: help create better environmental policies by understanding various environmental factors.

Advantages of Correlation Analysis

- Correlation analysis helps us understand how two variables affect each other or are related to each other.

- They are simple and very easy to interpret.

- Aids in decision-making process in business, healthcare, marketing, etc

- Helps in feature selection in machine learning.

- Gives a measure of the relation between two variables.

Disadvantages of Correlation Analysis

- Correlation does not imply causation, which means a variable may not be the cause for the other variable even though they are correlated.

- If outliers are not dealt with well they may cause errors.

- It works well only on bivariate relations and may not produce accurate results for multivariate relations.

- Complex relations can not be analyzed accurately.

Principal Component Analysis

Principal Component Analysis is an unsupervised learning algorithm that is used for the dimensionality reduction in machine learning. It is a statistical process that converts the observations of correlated features into a set of linearly uncorrelated features with the help of orthogonal transformation. These new transformed features are called the Principal Components. It is one of the popular tools that is used for exploratory data analysis and predictive modeling. It is a technique to draw strong patterns from the given dataset by reducing the variances.

PCA generally tries to find the lower-dimensional surface to project the high-dimensional data.

PCA works by considering the variance of each attribute because the high attribute shows the good split between the classes, and hence it reduces the dimensionality. Some real-world applications of PCA are image processing, movie recommendation system, optimizing the power allocation in various communication channels. It is a feature extraction technique, so it contains the important variables and drops the least important variable.

The PCA algorithm is based on some mathematical concepts such as:

- Variance and Covariance

- Eigenvalues and Eigen factors

Some common terms used in PCA algorithm:

- Dimensionality: It is the number of features or variables present in the given dataset. More easily, it is the number of columns present in the dataset.

- Correlation: It signifies that how strongly two variables are related to each other. Such as if one changes, the other variable also gets changed. The correlation value ranges from -1 to +1. Here, -1 occurs if variables are inversely proportional to each other, and +1 indicates that variables are directly proportional to each other.

- Orthogonal: It defines that variables are not correlated to each other, and hence the correlation between the pair of variables is zero.

- Eigenvectors: If there is a square matrix M, and a non-zero vector v is given. Then v will be eigenvector if Av is the scalar multiple of v.

- Covariance Matrix: A matrix containing the covariance between the pair of variables is called the Covariance Matrix.

Principal Components in PCA

As described above, the transformed new features or the output of PCA are the Principal Components. The number of these PCs are either equal to or less than the original features present in the dataset. Some properties of these principal components are given below:

- The principal component must be the linear combination of the original features.

- These components are orthogonal, i.e., the correlation between a pair of variables is zero.

- The importance of each component decreases when going to 1 to n, it means the 1 PC has the most importance, and n PC will have the least importance.

Steps for PCA algorithm

- Getting the dataset

Firstly, we need to take the input dataset and divide it into two subparts X and Y, where X is the training set, and Y is the validation set. - Representing data into a structure

Now we will represent our dataset into a structure. Such as we will represent the two-dimensional matrix of independent variable X. Here each row corresponds to the data items, and the column corresponds to the Features. The number of columns is the dimensions of the dataset. - Standardizing the data

In this step, we will standardize our dataset. Such as in a particular column, the features with high variance are more important compared to the features with lower variance.

If the importance of features is independent of the variance of the feature, then we will divide each data item in a column with the standard deviation of the column. Here we will name the matrix as Z. - Calculating the Covariance of Z

To calculate the covariance of Z, we will take the matrix Z, and will transpose it. After transpose, we will multiply it by Z. The output matrix will be the Covariance matrix of Z. - Calculating the Eigen Values and Eigen Vectors

Now we need to calculate the eigenvalues and eigenvectors for the resultant covariance matrix Z. Eigenvectors or the covariance matrix are the directions of the axes with high information. And the coefficients of these eigenvectors are defined as the eigenvalues. - Sorting the Eigen Vectors

In this step, we will take all the eigenvalues and will sort them in decreasing order, which means from largest to smallest. And simultaneously sort the eigenvectors accordingly in matrix P of eigenvalues. The resultant matrix will be named as P*. - Calculating the new features Or Principal Components

Here we will calculate the new features. To do this, we will multiply the P* matrix to the Z. In the resultant matrix Z*, each observation is the linear combination of original features. Each column of the Z* matrix is independent of each other. - Remove less or unimportant features from the new dataset.

The new feature set has occurred, so we will decide here what to keep and what to remove. It means, we will only keep the relevant or important features in the new dataset, and unimportant features will be removed out.

Applications of Principal Component Analysis

- PCA is mainly used as the dimensionality reduction technique in various AI applications such as computer vision, image compression, etc.

- It can also be used for finding hidden patterns if data has high dimensions. Some fields where PCA is used are Finance, data mining, Psychology, etc.

Principal Component Regression (PCR)

Principal Component Regression (PCR) is a statistical technique for regression analysis that is used to reduce the dimensionality of a dataset by projecting it onto a lower-dimensional subspace. This is done by finding a set of orthogonal (i.e., uncorrelated) linear combinations of the original variables, called principal components, that capture the most variance in the data. The principal components are used as predictors in the regression model, instead of the original variables.

PCR is often used as an alternative to multiple linear regression, especially when the number of variables is large or when the variables are correlated. By using PCR, we can reduce the number of variables in the model and improve the interpretability and stability of the regression results.

Features of the Principal Component Regression (PCR)

Here are some key features of Principal Component Regression (PCR):

- PCR reduces the dimensionality of a dataset by projecting it onto a lower-dimensional subspace, using a set of orthogonal linear combinations of the original variables called principal components.

- PCR is often used as an alternative to multiple linear regression, especially when the number of variables is large or when the variables are correlated.

- By using PCR, we can reduce the number of variables in the model and improve the interpretability and stability of the regression results.

- To perform PCR, we first need to standardize the original variables and then compute the principal components using singular value decomposition (SVD) or eigendecomposition of the covariance matrix of the standardized data.

- The principal components are then used as predictors in a linear regression model, whose coefficients can be estimated using least squares regression or maximum likelihood estimation.

Breaking down the Math behind Principal Component Regression (PCR)

Here is a brief overview of the mathematical concepts underlying Principal Component Regression (PCR):

- Dimensionality reduction: PCR reduces the dimensionality of a dataset by projecting it onto a lower-dimensional subspace, using a set of orthogonal linear combinations of the original variables called principal components. This is a way of summarizing the data by capturing the most important patterns and relationships in the data while ignoring noise and irrelevant information.

- Principal components: The principal components of a dataset are the orthogonal linear combinations of the original variables that capture the most variance in the data. They are obtained by performing singular value decomposition (SVD) or eigendecomposition of the covariance matrix of the standardized data. The number of principal components is typically chosen to be the number of variables, but it can be reduced if there is a large amount of collinearity among the variables.

- Linear regression: PCR uses the principal components as predictors in a linear regression model, whose coefficients can be estimated using least squares regression or maximum likelihood estimation. The fitted model can then be used to make predictions on new data.

Overall, PCR uses mathematical concepts from linear algebra and statistics to reduce the dimensionality of a dataset and improve the interpretability and stability of regression results.

Limitations of Principal Component Regression (PCR)

While Principal Component Regression (PCR) has many advantages, it also has some limitations that should be considered when deciding whether to use it for a particular regression analysis:

- PCR only works well with linear relationships: PCR assumes that the relationship between the predictors and the response variable is linear. If the relationship is non-linear, PCR may not be able to accurately capture it, leading to biased or inaccurate predictions. In such cases, non-linear regression methods may be more appropriate.

- PCR does not handle outliers well: PCR is sensitive to outliers in the data, which can have a disproportionate impact on the principal components and the fitted regression model. Therefore, it is important to identify and handle outliers in the data before applying PCR.

- PCR may not be interpretable: PCR involves a complex mathematical procedure that generates a set of orthogonal linear combinations of the original variables. These linear combinations may not be easily interpretable, especially if the number of variables is large. In contrast, multiple linear regression is more interpretable, since it uses the original variables directly as predictors.

- PCR may not be efficient: PCR is computationally intensive, especially when the number of variables is large. Therefore, it may not be the most efficient method for regression analysis, especially when the dataset is large. In such cases, faster and more efficient regression methods may be more appropriate.

Overall, while PCR has many advantages, it is important to carefully consider its limitations and potential drawbacks before using it for regression analysis.

How Principal Component Regression (PCR) is compared to other regression analysis techniques?

Principal Component Regression (PCR) is often compared to other regression analysis techniques, such as multiple linear regression, principal component analysis (PCA), and partial least squares regression (PLSR). Here are some key differences between PCR and these other techniques:

- PCR vs. multiple linear regression: PCR is similar to multiple linear regression, in that both techniques use linear regression to model the relationship between a set of predictors and a response variable. However, PCR differs from multiple linear regression in that it reduces the dimensionality of the data by projecting it onto a lower-dimensional subspace using the principal components. This can improve the interpretability and stability of the regression results, especially when the number of variables is large or when the variables are correlated.

- PCR vs. PCA: PCR is similar to PCA, in that both techniques use principal components to reduce the dimensionality of the data. However, PCR differs from PCA in that it uses the principal components as predictors in a linear regression model, whereas PCA is an unsupervised technique that only analyzes the structure of the data itself, without using a response variable.

- PCR vs. PLSR: PCR is similar to PLSR, in that both techniques use principal components to reduce the dimensionality of the data and improve the interpretability and stability of the regression results. However, PCR differs from PLSR in that it uses the principal components as predictors in a linear regression model, whereas PLSR uses a weighted combination of the original variables as predictors in a partial least squares regression model. This allows PLSR to better capture non-linear relationships between the predictors and the response variable.

Overall, PCR is a useful technique for regression analysis that can be compared to multiple linear regression, PCA, and PLSR, depending on the specific characteristics of the data and the goals of the analysis.

CART (Classification And Regression Tree) in Machine Learning

CART( Classification And Regression Trees) is a variation of the decision tree algorithm. It can handle both classification and regression tasks. Scikit-Learn uses the Classification And Regression Tree (CART) algorithm to train Decision Trees (also called “growing” trees). CART was first produced by Leo Breiman, Jerome Friedman, Richard Olshen, and Charles Stone in 1984.

CART(Classification And Regression Tree) for Decision Tree

CART is a predictive algorithm used in Machine learning and it explains how the target variable’s values can be predicted based on other matters. It is a decision tree where each fork is split into a predictor variable and each node has a prediction for the target variable at the end.

The term CART serves as a generic term for the following categories of decision trees:

- Classification Trees: The tree is used to determine which “class” the target variable is most likely to fall into when it is continuous.

- Regression trees: These are used to predict a continuous variable’s value.

In the decision tree, nodes are split into sub-nodes based on a threshold value of an attribute. The root node is taken as the training set and is split into two by considering the best attribute and threshold value. Further, the subsets are also split using the same logic. This continues till the last pure sub-set is found in the tree or the maximum number of leaves possible in that growing tree.

CART Algorithm

Classification and Regression Trees (CART) is a decision tree algorithm that is used for both classification and regression tasks. It is a supervised learning algorithm that learns from labelled data to predict unseen data.

- Tree structure: CART builds a tree-like structure consisting of nodes and branches. The nodes represent different decision points, and the branches represent the possible outcomes of those decisions. The leaf nodes in the tree contain a predicted class label or value for the target variable.

- Splitting criteria: CART uses a greedy approach to split the data at each node. It evaluates all possible splits and selects the one that best reduces the impurity of the resulting subsets. For classification tasks, CART uses Gini impurity as the splitting criterion. The lower the Gini impurity, the more pure the subset is. For regression tasks, CART uses residual reduction as the splitting criterion. The lower the residual reduction, the better the fit of the model to the data.

- Pruning: To prevent overfitting of the data, pruning is a technique used to remove the nodes that contribute little to the model accuracy. Cost complexity pruning and information gain pruning are two popular pruning techniques. Cost complexity pruning involves calculating the cost of each node and removing nodes that have a negative cost. Information gain pruning involves calculating the information gain of each node and removing nodes that have a low information gain.

How does CART algorithm works?

The CART algorithm works via the following process:

- The best-split point of each input is obtained.

- Based on the best-split points of each input in Step 1, the new “best” split point is identified.

- Split the chosen input according to the “best” split point.

- Continue splitting until a stopping rule is satisfied or no further desirable splitting is available.

CART algorithm uses Gini Impurity to split the dataset into a decision tree .It does that by searching for the best homogeneity for the sub nodes, with the help of the Gini index criterion.

Gini index/Gini impurity

The Gini index is a metric for the classification tasks in CART. It stores the sum of squared probabilities of each class. It computes the degree of probability of a specific variable that is wrongly being classified when chosen randomly and a variation of the Gini coefficient. It works on categorical variables, provides outcomes either “successful” or “failure” and hence conducts binary splitting only.

The degree of the Gini index varies from 0 to 1,

- Where 0 depicts that all the elements are allied to a certain class, or only one class exists there.

- The Gini index of value 1 signifies that all the elements are randomly distributed across various classes, and

- A value of 0.5 denotes the elements are uniformly distributed into some classes.

Mathematically, we can write Gini Impurity as follows:

where Pi is the probability of an object being classified to a particular class.

CART for Classification

A classification tree is an algorithm where the target variable is categorical. The algorithm is then used to identify the “Class” within which the target variable is most likely to fall. Classification trees are used when the dataset needs to be split into classes that belong to the response variable(like yes or no)

For classification in decision tree learning algorithm that creates a tree-like structure to predict class labels. The tree consists of nodes, which represent different decision points, and branches, which represent the possible result of those decisions. Predicted class labels are present at each leaf node of the tree.

How Does CART for Classification Work?

CART for classification works by recursively splitting the training data into smaller and smaller subsets based on certain criteria. The goal is to split the data in a way that minimizes the impurity within each subset. Impurity is a measure of how mixed up the data is in a particular subset. For classification tasks, CART uses Gini impurity

- Gini Impurity- Gini impurity measures the probability of misclassifying a random instance from a subset labeled according to the majority class. Lower Gini impurity means more purity of the subset.

- Splitting Criteria- The CART algorithm evaluates all potential splits at every node and chooses the one that best decreases the Gini impurity of the resultant subsets. This process continues until a stopping criterion is reached, like a maximum tree depth or a minimum number of instances in a leaf node.

CART for Regression

A Regression tree is an algorithm where the target variable is continuous and the tree is used to predict its value. Regression trees are used when the response variable is continuous. For example, if the response variable is the temperature of the day.

CART for regression is a decision tree learning method that creates a tree-like structure to predict continuous target variables. The tree consists of nodes that represent different decision points and branches that represent the possible outcomes of those decisions. Predicted values for the target variable are stored in each leaf node of the tree.

How Does CART works for Regression?

Regression CART works by splitting the training data recursively into smaller subsets based on specific criteria. The objective is to split the data in a way that minimizes the residual reduction in each subset.

- Residual Reduction- Residual reduction is a measure of how much the average squared difference between the predicted values and the actual values for the target variable is reduced by splitting the subset. The lower the residual reduction, the better the model fits the data.

- Splitting Criteria- CART evaluates every possible split at each node and selects the one that results in the greatest reduction of residual error in the resulting subsets. This process is repeated until a stopping criterion is met, such as reaching the maximum tree depth or having too few instances in a leaf node.

Pseudo-code of the CART algorithm

d = 0, endtree = 0

Note(0) = 1, Node(1) = 0, Node(2) = 0

while endtree < 1

if Node(2d-1) + Node(2d) + .... + Node(2d+1-2) = 2 - 2d+1

endtree = 1

else

do i = 2d-1, 2d, .... , 2d+1-2

if Node(i) > -1

Split tree

else

Node(2i+1) = -1

Node(2i+2) = -1

end if

end do

end if

d = d + 1

end whileCART model representation

CART models are formed by picking input variables and evaluating split points on those variables until an appropriate tree is produced.

Steps to create a Decision Tree using the CART algorithm:

- Greedy algorithm: In this The input space is divided using the Greedy method which is known as a recursive binary spitting. This is a numerical method within which all of the values are aligned and several other split points are tried and assessed using a cost function.

- Stopping Criterion: As it works its way down the tree with the training data, the recursive binary splitting method described above must know when to stop splitting. The most frequent halting method is to utilize a minimum amount of training data allocated to every leaf node. If the count is smaller than the specified threshold, the split is rejected and also the node is considered the last leaf node.

- Tree pruning: Decision tree’s complexity is defined as the number of splits in the tree. Trees with fewer branches are recommended as they are simple to grasp and less prone to cluster the data. Working through each leaf node in the tree and evaluating the effect of deleting it using a hold-out test set is the quickest and simplest pruning approach.

- Data preparation for the CART: No special data preparation is required for the CART algorithm.

Decision Tree CART Implementations

Here is the code implements the CART algorithm for classifying fruits based on their color and size. It first encodes the categorical data using a LabelEncoder and then trains a CART classifier on the encoded data. Finally, it predicts the fruit type for a new instance and decodes the result back to its original categorical value.

from sklearn.tree import DecisionTreeClassifier

from sklearn.preprocessing import LabelEncoder

# Define the features and target variable

features = [

["red", "large"],

["green", "small"],

["red", "small"],

["yellow", "large"],

["green", "large"],

["orange", "large"],

]

target_variable = ["apple", "lime", "strawberry", "banana", "grape", "orange"]

# Flatten the features list for encoding

flattened_features = [item for sublist in features for item in sublist]

# Use a single LabelEncoder for all features and target variable

le = LabelEncoder()

le.fit(flattened_features + target_variable)

# Encode features and target variable

encoded_features = [le.transform(item) for item in features]

encoded_target = le.transform(target_variable)

# Create a CART classifier

clf = DecisionTreeClassifier()

# Train the classifier on the training set

clf.fit(encoded_features, encoded_target)

# Predict the fruit type for a new instance

new_instance = ["red", "large"]

encoded_new_instance = le.transform(new_instance)

predicted_fruit_type = clf.predict([encoded_new_instance])

decoded_predicted_fruit_type = le.inverse_transform(predicted_fruit_type)

print("Predicted fruit type:", decoded_predicted_fruit_type[0])Output:

Predicted fruit type: applePOPULAR CART-BASED ALGORITHMS:

- CART (Classification and Regression Trees): The original algorithm that uses binary splits to build decision trees.

- C4.5 and C5.0: Extensions of CART that allow for multiway splits and handle categorical variables more effectively.

- Random Forests: Ensemble methods that use multiple decision trees (often CART) to improve predictive performance and reduce overfitting.

- Gradient Boosting Machines (GBM): Boosting algorithms that also use decision trees (often CART) as base learners, sequentially improving model performance.

Advantages of CART

- Results are simplistic.

- Classification and regression trees are Nonparametric and Nonlinear.

- Classification and regression trees implicitly perform feature selection.

- Outliers have no meaningful effect on CART.

- It requires minimal supervision and produces easy-to-understand models.

Limitations of CART

- Overfitting.

- High Variance.

- low bias.

- the tree structure may be unstable.

Applications of the CART algorithm

- For quick Data insights.

- In Blood Donors Classification.

- For environmental and ecological data.

- In the financial sectors.

Evaluation Metrics in Machine Learning

Classification Metrics

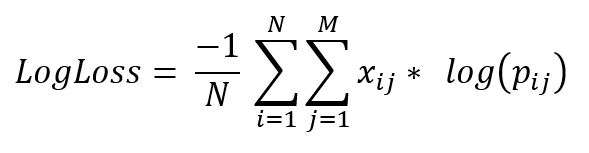

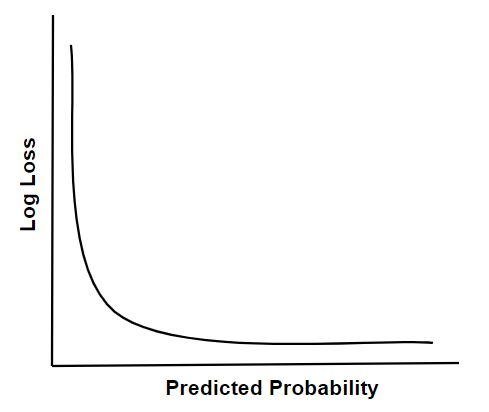

In a classification task, the main task is to predict the target variable which is in the form of discrete values. To evaluate the performance of such a model there are metrics as mentioned below:

- Classification Accuracy

- Logarithmic loss

- Area under Curve

- F1 score

- Precision

- Recall

- Confusion Matrix

Regression Evaluation Metrics

In the regression task, the work is to predict the target variable which is in the form of continuous values. To evaluate the performance of such a model below mentioned evaluation metrics are used:

- Mean Absolute Error

- Mean Squared Error

- Root Mean Square Error

- Root Mean Square Logarithmic Error

- R2 – Score

Techniques To Evaluate Accuracy of Classifier in Data Mining